Good Stats Bad Stats

goodstatsbadstats.com

Parity in the NFL

I sometimes wonder if editors read the stories they publish. I sometimes wonder if writers even think for a few minutes about the data they use when they write their stories. This morning I was greeted with the headline*:

“Five of the six NFC playoff clubs from a year ago are under .500. Parity reigns, proving the adage any team can beat any other…..ON ANY GIVEN SUNDAY”

Further on in the article I read:

The league has long taken pride in its ability to reshuffle the deck each year, mixing up the standings

I could try to compute the probability that five of the six teams would have losing records through the first three games. That would not be an easy task, and it would require a number of assumptions that could be easily criticized.

That is not necessary. The problem with their conclusions becomes apparent a few paragraphs later, where we learn that all six of the playoff teams from the AFC have winning records through the first three games of the season.

Oops. Whatever we learned about year-to-year parity in the NFL has been turned on its head. Now it looks, based on the AFC games, like nothing has changed from one year to the next.

Somehow the writer and the editor did not recognize that what they thought they could show using the NFC data was directly contradicted by the AFC data.

*Note that once again the Washington Post has elected to confuse everyone by using one title for their print edition and an entirely different title for their online edition of the same article.

Posted in Methodology Issues, Statistical Literacy, The Media

The probability of making the baseball playoffs

The other evening I heard that the Washington Nationals had a two percent chance of making the playoffs. Naturally I wondered how someone had computed that probability. That led me to the site coolstandings.com, where the numbers for all the teams are laid out for everyone to see. What is really nice is the educational aspect of what they have done.

They set up two models for computing the probabilities – the “smart” model and the “dumb” model. The “dumb” model assumes that each team has an even chance of winning a given game. The “smart” model takes into account the past performance of each team.
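
To make the distinction concrete, here is a minimal sketch in Python. It is not the method coolstandings.com uses. It only contrasts a coin-flip assumption with one that uses a team's past winning percentage as a stand-in for "past performance." The record, the games remaining, and the wins needed are made-up numbers, and `prob_at_least` is a hypothetical helper.

```python
from math import comb

def prob_at_least(k, n, p):
    """Probability of at least k wins in n independent games,
    each won with probability p (a simple binomial model)."""
    return sum(comb(n, w) * p**w * (1 - p)**(n - w) for w in range(k, n + 1))

# Hypothetical team: 7 games left, needs at least 5 wins to get in.
games_left, wins_needed = 7, 5
past_wins, past_losses = 90, 65  # made-up season record

# "Dumb" style assumption: every game is a coin flip.
dumb = prob_at_least(wins_needed, games_left, 0.5)

# "Smart" style assumption: use the team's past winning percentage.
smart = prob_at_least(wins_needed, games_left,
                      past_wins / (past_wins + past_losses))

print(f"coin-flip model:        {dumb:.3f}")
print(f"past-performance model: {smart:.3f}")
```

A real playoff calculation also has to account for the schedules and results of every competing team, which this sketch ignores.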

And the really nice feature is that you can compare the results from the two models over time. The differences are quite big. Currently, as of Sunday morning, September 22, the chance of Tampa Bay making the playoffs is 63.6% under the “smart” model, but a much better 76.8% under the “dumb” model. On the other hand, Cleveland has a 78.3% chance under the “smart” model and only 65.8% under the “dumb” model. Why the differences? It all comes down to which teams they will play over the final week of the season.

Play with the data a bit. Look at the trends. Oh, and the Washington Nationals? Their chances are now at 1.3%, despite their having won every game since I first heard that they had a 2% chance the other evening. Their problem, in short, is that they have to win just about every game while either Pittsburgh or Cincinnati collapses in this final week.

Posted in Telling the Full Story

Understanding reported changes in median income

This is the time of year when the Census Bureau releases data on income and health in the United States. It is also a time when, in the rush to tell the American people what is happening in the nation and in their own communities, the media gets the story very badly wrong.

Today’s Washington Post included an article titled: “Virginia’s median household income takes hit, census data indicate.” The article is based on data from the American Community Survey and released in the recent Census Bureau Report with the somewhat deceptive title: “Household Income: 2012.” It contained data on median household income for states and selected metropolitan areas for 2000, 2011 and 2012.

The Post report started with the statement:

Virginia’s median household income fell more than 2 percent last year, the most significant drop in the country at a time when most states saw their incomes go flat, according to Census Bureau figures.

With this one line the reporters and editors at the Washington Post showed a clear failure to understand the very basics of statistical analysis.

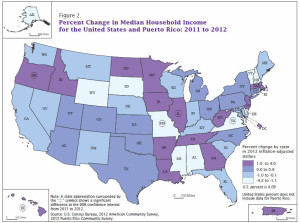

So what went wrong? Table 1 of the Census Bureau report gives the year-to-year changes in median household income. The numbers are shown graphically at the right, using the image from the report. From the graphic and the table we can see that there were six states where the change in median household income from 2011 to 2012 was statistically significant. All this means is that in those six states the estimated changes were large enough, and the sample sizes large enough, that statisticians using classical testing methods would be willing to say that the medians actually changed. Such measures say nothing about which changes are analytically significant. Nor do they measure which states had the largest changes.

In a companion article the reporters partially got the concept and said:

By this yardstick, there were significant income increases for 2012 in Hawaii, Illinois, Massachusetts and Oregon. There were significant declines in Missouri and Virginia.

Here they are specifically referring to “statistical significance.” The problem is that the readers are not versed in the details of the statistical methods. What they gather from the articles as written is that Virginia had the biggest and most significant loss in median household income between the two years. What the actual estimates themselves show are decreases of 2.6% in AK, 3.0% in DE, 2.2% in SD, and 4.2% in WY. None of these differences is “statistically different” from zero. But they are the best estimates available, and by just about any statistical standard the true differences in these other four states are likely larger than the difference measured for the state of Virginia. Any claim that Virginia had “the most significant drop in the country” cannot be justified.

I must also fault the Census Bureau on their report. Part of the problem they have is with the strict use of classical statistical methods. For the graphic and the table they used the 90% level of confidence in their testing. However, there are 50 states being compared year to year. With 50 comparisons at that level, about five changes would be expected to come out “statistically significant” even if nothing had really changed in any state. To see six states flagged with significant changes is no surprise to the trained statistician. To highlight those six changes gives them a credibility beyond their due. The statisticians reading this might argue with me on this, but they are not the ones reading the report. Rather, it is writers, politicians and the untrained public who are the intended audience for the report. As such, the report must be written for that group of people. When the reporters write “There were significant declines in Missouri and Virginia,” the general reader has no idea that they are talking about statistical significance and not analytical significance.
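
The arithmetic behind that expectation can be sketched in a few lines of Python. This is only an illustration under two assumptions that are mine, not the Census Bureau's: that no state's median truly changed, and that the 50 tests are independent, so the number of flagged states follows a binomial distribution.

```python
from math import comb

N_STATES = 50   # number of year-to-year comparisons
ALPHA = 0.10    # false-alarm rate implied by a 90% confidence level

def binom_pmf(k, n, p):
    """Probability of exactly k 'significant' results out of n tests."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

# Expected number of states flagged purely by chance.
expected_flags = N_STATES * ALPHA

# Chance of seeing six or more flagged states when nothing changed.
prob_six_or_more = 1 - sum(binom_pmf(k, N_STATES, ALPHA) for k in range(6))

print(f"expected flagged states: {expected_flags:.1f}")
print(f"P(six or more flagged):  {prob_six_or_more:.3f}")
```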

Lest one think that those on the east coast were the only ones who did not understand the data, the Oregon Public Broadcasting group seemed to be rejoicing in the increase in median household income in that state. At least they managed to find a commenter who was not so sure of the results – or at least was a bit surprised by them.

This entire episode highlights the dangers of focusing on the extreme values in any analysis. Generate 50 random numbers from any distribution, test each at the 90% confidence level, and you will likely be able to conclude that about five of those numbers are “statistically different” from the mean of the underlying distribution. The ones that are “statistically different” are the ones that will be highlighted in the media as doing far better or worse than most of the other states.
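
Readers who want to try this experiment can do so with a short simulation. The sketch below assumes a standard normal distribution and a two-sided z-test against the known mean of zero; the function name `count_flagged`, the seed, and the number of repetitions are arbitrary choices of mine.

```python
import random
from statistics import NormalDist

def count_flagged(n_values=50, confidence=0.90, rng=random):
    """Draw n_values standard-normal numbers and count how many a
    two-sided z-test would call different from the true mean of zero."""
    cutoff = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.645
    return sum(abs(rng.gauss(0.0, 1.0)) > cutoff for _ in range(n_values))

rng = random.Random(2012)  # fixed seed so the run is repeatable

# Repeat the 50-number experiment many times and average the counts.
counts = [count_flagged(rng=rng) for _ in range(2000)]
average_flagged = sum(counts) / len(counts)

print(f"average number flagged per batch of 50: {average_flagged:.2f}")
```

Every flagged value in this simulation is a false alarm, since all 50 numbers come from the same distribution.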