Good Stats Bad Stats

goodstatsbadstats.com

AJC Analysis of School Testing Data – Is There Cheating?

This past Sunday the Atlanta Journal Constitution published a major article titled: “Cheating our Children.” This was a follow-up to their investigation into cheating on school tests in Atlanta, Georgia a few years back. This time they tackled the difficult task of looking for evidence of cheating beyond the Atlanta area.

Let me first commend the folks at the AJC on being willing to take on this important question and for the amount of effort and work they have put into the analysis. They have a good appreciation of the scope and impact cheating on school tests can have on students, teachers and administrators. They also understand the motivations behind the cheating they have uncovered in the past.

They very clearly state that the analysis does not demonstrate cheating has occurred in any specific school district. But at the same time it is very clear that they do feel that significant cheating has occurred and that the data they have gathered demonstrates that. Those may seem to be inconsistent statements but they clearly state that they cannot envision other alternative explanations for the results of their analysis. To get a feel for this tension it is well worth listening to the webinar they did on Tuesday, March 27th.

The key question that must be answered is: did this analysis show anything different from what would have been seen by pure chance had there been no cheating? I am forced to conclude that not enough information has been provided about the statistical methods that were used to answer that question in the affirmative. I must ask: of the 169 school districts that the AJC flagged as suspicious, how many were simply false positives in the statistical sense and how many are true problem districts where cheating has taken place? I am not asking for the status of specific school districts, but for the relative size of the two groups. The analysis does not at this stage provide any hint of what those numbers are. Any methodology must assure that the number of false positives is kept as small as possible without sacrificing the ability to identify the true problem districts. That issue has not been addressed in the report and that must be viewed as a major shortcoming.

Based on what the AJC posted about the methodology I have several reservations about the analysis at this point. Lack of documentation of the methodology makes it very difficult to adequately assess the accuracy of the conclusions reached by the reporters at the AJC. It is entirely possible that many of my criticisms will be answered when and if the AJC fully discloses their methodology and the data they have used. They have provided an overview of the methodology they used. Some details of the methods are scattered throughout their reports, the webinar, and the Get Schooled blog. A response to criticism of their handling of the issue of student mobility is posted at that blog. My primary concern is that the methods have not been disclosed in sufficient detail to evaluate the accuracy of both the analysis and the claims that are being made. In the webinar AJC reporters said “the actual data gets in the way of the larger story (message) here.” What I feel they have failed to appreciate is that the foundation for the story is the data and the methodology they used. Without an adequate ability for that analysis to be independently evaluated the AJC will be subject to continuing criticism. For without the data there is no story. Without the analysis there is no story.

The base data set comprises test results by calendar year, by class year within a school, by subject. Year to year changes in test scores for each cohort were computed. They tabulated 1.6 million such observations. These observations were then grouped by state, year, and test (math and reading – I believe) into about 2,400 clusters. Regressions were run for each of these 2,400 clusters. The regression observations were weighted by the number of students in the class. They provided no information on the regressions themselves beyond this. They did not specify any of the independent variables used in the regressions. Nor did they provide any of the usual diagnostic measures that indicate whether the results of a regression analysis are appropriate. The lack of that information is a major deficiency in the reported results and makes it impossible to evaluate the quality of the models used.

A critical variable that should have been included in the models at this point would be the mobility of students from year to year as the variance of the dependent measure – change in test scores – is directly dependent on the overlap in students from year to year. The weighting that was done would have been dependent on both the class size and the amount of year to year overlap.

One aspect of the analysis I would like to know more about at this point is what I would call the “clustering effect.” I fully expect there to be schools which behave differently from other schools. I fully expect there to be real differences between school districts. In fact the core of the argument the AJC is making is that there are differences between school districts due to cheating. There are many other reasons for differences in behavior between school districts. These differences should be incorporated into the models. There is no indication that this was done.

My next question has to do with the data themselves. I do not know how many classes they followed over the years at a given school. The AJC reporters did not provide that information. But if one class is followed for three years then they have three observations for the same cohort. This will result in two observations being used in the regression. They would have the difference between year 1 and year 2 and also the difference between year 2 and year 3. So the same students (less the student mobility factor) are present in two of the observations, and the year 2 score enters both differences. This creates a correlation in the observations that are used in the regression. That must be incorporated into the modeling process.
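To see why the shared year matters, here is a minimal simulation sketch. It assumes, purely for illustration, that a cohort's score in each year is independent noise with the same variance; the function names and parameters are my own and are not taken from the AJC's methodology. Under that assumption the two consecutive changes share the year 2 score with opposite signs, so they come out negatively correlated.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate_change_correlation(n_cohorts=50_000, seed=1):
    """Correlation between (year2 - year1) and (year3 - year2) changes.

    Assumption: yearly cohort scores are independent N(0, 1) draws,
    with no cheating and no persistent cohort effect.
    """
    rng = random.Random(seed)
    first_change, second_change = [], []
    for _ in range(n_cohorts):
        y1, y2, y3 = (rng.gauss(0.0, 1.0) for _ in range(3))
        first_change.append(y2 - y1)
        second_change.append(y3 - y2)
    return pearson(first_change, second_change)


corr = simulate_change_correlation()
print(f"correlation between consecutive changes: {corr:.3f}")
```

In this toy setup the theoretical correlation is -0.5, since the covariance is minus the year 2 variance and each change has twice that variance. Real data would differ, but the point stands that the two observations are not independent.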

The next step creates additional issues in the analysis. They then flagged classes based on a standard statistical t-test that were outside the expected range “with a probability of less than 0.05.” This was their starting point for identifying outliers. They did not provide, but should have, the number of schools that were flagged at this point. It is important to realize that even if there was no cheating going on and the models were perfect descriptions of the testing situation approximately 5% of the classes would be flagged by this method. This flagging would reflect not evidence of cheating but just pure random chance. If more than 5% or less than 5% of the classes were actually flagged it could be either due to real cheating in some schools or it could be because of inadequately defined models.
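The 5% figure can be checked with a small simulation. This is only a sketch: it assumes standardized residuals that are exactly standard normal and a two-sided cutoff of 1.96, which stands in for whatever t-test the AJC actually ran (their exact test is not documented).

```python
import random


def simulate_null_flag_rate(n_classes=100_000, z_cutoff=1.96, seed=0):
    """Share of classes flagged when there is no cheating at all.

    Assumption: each class's standardized score change is an
    independent N(0, 1) draw, i.e. the model is exactly right.
    """
    rng = random.Random(seed)
    flagged = sum(
        1 for _ in range(n_classes) if abs(rng.gauss(0.0, 1.0)) > z_cutoff
    )
    return flagged / n_classes


rate = simulate_null_flag_rate()
print(f"share of classes flagged with no cheating: {rate:.3f}")
```

The flagged share lands close to 0.05 even though no class in the simulation cheated, which is exactly the baseline any real flag count has to be compared against.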

Using the set of classes flagged the AJC reporters then identified school districts where cheating may have occurred “by calculating the probability that a district would, by random chance, have a number of flagged classes in a year, given the district’s total number of classes and the percentage of classes flagged statewide.” This may appear to be a rigorous method. The posting of school districts selected in this way indicates otherwise. For example for Dallas, TX they used data from 2006, 2008, 2009 and 2011. However the database tool provides data for Dallas for 2008, 2009, 2010, and 2011. Why, I must ask, was 2010 excluded from the discussion, and why was 2006 included? This behavior creates suspicion about how the cities were selected. It also raises serious questions when they claim the odds of what they saw in Dallas were “1 in 100 billion.” Those odds are very much dependent on how Dallas was selected and how the set of years was selected for the school district. Given this I am left with no description that adequately describes how the “potential problem” districts were identified.

Let me reiterate what I said above about the flagging of the schools. With this methodology it is important to realize that even if there was no cheating going on and the models were perfect descriptions of the testing situation a subset of the school districts would still be flagged. These would be identified just due to random chance. A core question then is: was a district flagged due to normal random chance, due to differences inherent in the school district, or due to cheating? The methodology is only useful if the major portion of the school districts flagged were flagged due to cheating. There is no evidence provided to support the conclusion that that is what happened.

Let me return now to the statement in the methodology description that the AJC provides under the “How We Did It” tab:

“we looked for improbable clusters of unusual score changes within districts by calculating the probability that a district would, by random chance, have a number of flagged classes in a year.”

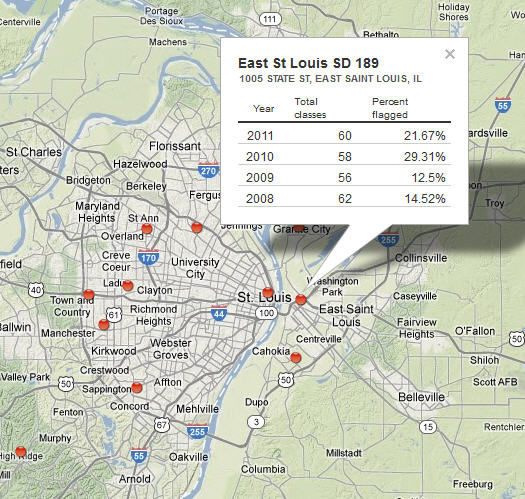

The image to the right, captured from the AJC database, shows the situation in the East Saint Louis School District. In their description the reporters claim that this situation had odds of 1 in 100 billion. The calculation of these odds is straightforward. The total number of classes over the four years from 2008 to 2011 was 236. Under the model used at the AJC they expected 5% of these to be flagged. This is 12 classes. They found that 46 classes were flagged. The 1 in 100 billion is the probability of seeing 46 or more classes flagged given that a 5% flag rate was expected.
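For readers who want to reproduce this kind of number, the tail probability can be sketched as an exact binomial sum. The inputs below are the figures quoted above (236 classes, 46 flagged, a 5% flag rate), and the independence of classes is assumed. The AJC's actual statewide flag rates and rounding are not disclosed, so the value this prints should not be expected to match their published odds exactly.

```python
from math import comb


def binomial_upper_tail(n, k, p):
    """P(X >= k) for X ~ Binomial(n, p), summed term by term."""
    return sum(
        comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1)
    )


n_classes = 236        # classes in the district, 2008 to 2011
flag_rate = 0.05       # assumed chance that any one class is flagged
observed_flags = 46    # classes actually flagged

expected_flags = n_classes * flag_rate
tail = binomial_upper_tail(n_classes, observed_flags, flag_rate)

print(f"expected flagged classes: {expected_flags:.1f}")
print(f"P(at least {observed_flags} flagged): {tail:.3g}")
```

The calculation treats every class as an independent draw with the same flag probability. Both of those assumptions are questioned below, and the result is only as good as they are.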

There are weaknesses in this calculation. First, for other cities they did not use the four year time span. When they did the analysis for the Gary, IN school district they used 2006 and 2007 data for the analysis. It is not clear why they did not use 2008 to 2011 data there as it might have given the same conclusion. But if one is allowed to choose what years to use in the analysis then the probabilities of the outcome change and will be higher as there are more opportunities for the event to occur. Be aware also that these calculations all assume that the model was correctly specified. Errors in the model will impact the probabilities. It also assumes that each test, in each class, in each year within a school is an independent event. This is a very strong assumption for the data set the AJC used. If a single teacher cheats in multiple years or multiple classes, or an administrator cheats, then this assumption is violated. Also if an administrator develops an effective teaching method and implements it the assumption has been violated. The simple presence of the same teacher in the classroom for multiple classes creates correlations that then violate the independence assumption.
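The effect of violating independence can be illustrated with a toy simulation. Everything in it is hypothetical: I group the 236 classes into 59 groups of 4 (think of one teacher's classes), and with probability `share` a class simply inherits a flag drawn once for its group, otherwise it gets its own independent draw. The marginal flag rate stays at 5% either way, and no cheating is modeled; only the correlation changes.

```python
import random
from statistics import pvariance


def flagged_count(rng, n_groups, group_size, flag_rate, share):
    """Number of flagged classes in one simulated district."""
    total = 0
    for _ in range(n_groups):
        group_flag = rng.random() < flag_rate  # shared draw for the group
        for _ in range(group_size):
            if rng.random() < share:
                total += group_flag            # class copies the group draw
            else:
                total += rng.random() < flag_rate  # independent draw
    return total


def simulate(share, trials=4000, seed=2):
    """Flagged-class counts for many simulated districts of 59 x 4 classes."""
    rng = random.Random(seed)
    return [flagged_count(rng, 59, 4, 0.05, share) for _ in range(trials)]


independent = simulate(share=0.0)
clustered = simulate(share=0.7)

var_independent = pvariance(independent)
var_clustered = pvariance(clustered)
tail_independent = sum(c >= 24 for c in independent) / len(independent)
tail_clustered = sum(c >= 24 for c in clustered) / len(clustered)

print(f"variance of flagged count: {var_independent:.1f} vs {var_clustered:.1f}")
print(f"share with 24+ flags:      {tail_independent:.4f} vs {tail_clustered:.4f}")
```

With the same 5% flag rate, the clustered districts show a much wider spread of flagged counts, so a count that looks wildly improbable under the independence assumption is far less surprising once correlation within a school is allowed for.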

Another key but subtle feature in computing the probability that the outlier event occurred is that it focuses on just one school district at a time. Regardless of which school districts were the outliers in their analysis the discussion would have homed in on those school districts. There were 14,723 school districts in the study. That is 14,723 chances for the outlier event to occur. That in turn increases the probability of seeing such an event somewhere by a factor of roughly 14,723. Yes, the probability for East Saint Louis is as calculated, but only when East Saint Louis is considered alone. However, had it been Pittsburgh, PA instead, the analysis would have homed in on that school district, likely making a similar claim. There are multiple opportunities to make the same claim when there are over 14,000 school districts. So the probability for an outlier of the size seen in East Saint Louis is much higher.
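The size of this adjustment can be sketched in a few lines. The sketch takes the published 1 in 100 billion figure as the single-district probability and assumes, for simplicity, that the 14,723 districts are independent and equally likely to produce such an outlier; neither assumption comes from the AJC.

```python
from math import expm1, log1p


def prob_at_least_one(p_single, n_districts):
    """P(at least one of n independent districts shows the event).

    Computes 1 - (1 - p)^n in a way that stays accurate for tiny p.
    """
    return -expm1(n_districts * log1p(-p_single))


p_single = 1e-11        # the quoted "1 in 100 billion"
n_districts = 14_723    # districts in the study

family = prob_at_least_one(p_single, n_districts)
ratio = family / p_single

print(f"chance of at least one such district: {family:.3g}")
print(f"increase over the single-district figure: about {ratio:,.0f}x")
```

For probabilities this small the increase is almost exactly the number of districts. The adjusted figure is still small here, but it is the one that matches the way the district was actually singled out.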

There are other weaknesses in the approach that the AJC has used. It cannot detect consistent cheating. If the entire school buys into a culture of cheating each year then the school will forever appear in the data like any other high performing school. It is only when a change occurs that this type of analysis can flag a problem school or school district. The approach is also not conducive to detecting cheating at individual schools. There is just not enough data for an individual school to effectively utilize the approaches used by the AJC.

That leads me to return to my key comment. Did this analysis show anything different from what would have been seen by pure chance had there been no cheating? At this point not enough information has been provided about the statistical methods that were used to answer that question in the affirmative.

It is my hope that when the AJC provides a full description of their methodology and the full dataset, as they indicated they would be doing in the Tuesday webinar, their findings will be substantiated and that as a result of the work they have done the many difficult and complex issues surrounding testing in the nation’s schools can be more usefully addressed by all parties. The nation suffers when students do not get the education they both need and deserve.

Posted in Methodolgy Issues